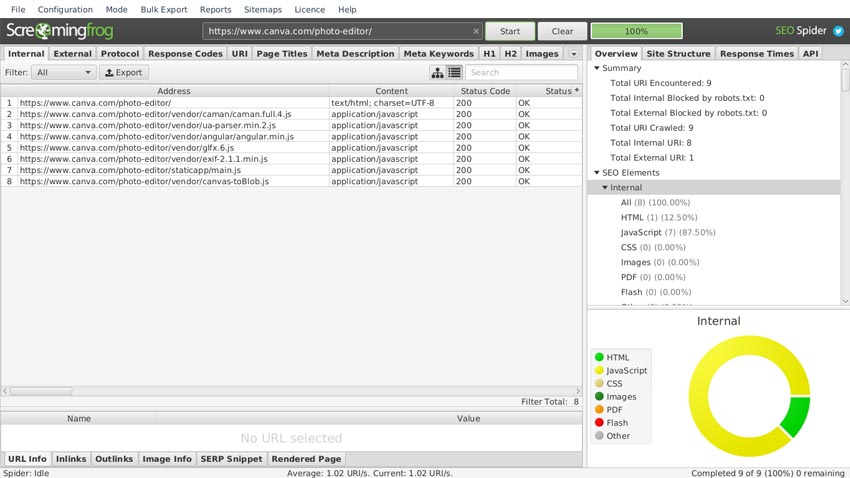

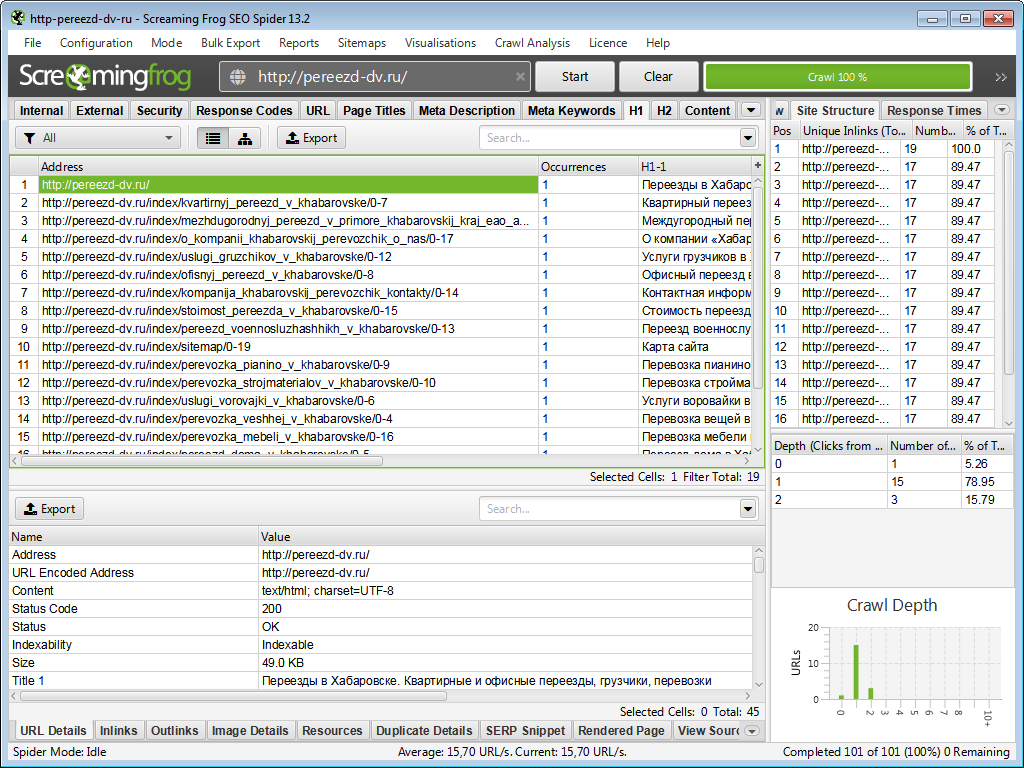

To get started, download and install the SEO Spider. It's free, runs on Windows, Mac OS X, and Ubuntu, and lets you crawl up to 500 URLs per crawl.

Simply click the download button to get started. Then double-click the SEO Spider installation file you downloaded and follow the installer's instructions.

You can purchase a license to remove the 500 URL crawl limit, expand the configuration options, and gain access to more advanced capabilities. The free version does not support Google Analytics or Google Search Console integration. Without those integrations, you'll have a harder time pulling analytics data into the crawl. However, in this guide I will be using the free version, since a lot of what we'll cover can be done without either integration.

When crawling larger sites, it's sometimes best to limit the crawler to a subset of URLs so you gather a decent representative sample of data. This keeps file sizes and data exports a little easier to manage. Before starting a crawl, consider what kind of information you're looking for, how big the site is, and how much of the site you'll need to crawl to get it all.
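If a site is much larger than the free version's 500 URL limit, one way to get a representative sample is to spread your URL budget across the site's sections. The helper below, `sample_urls`, is a hypothetical sketch and not a feature of the SEO Spider. It assumes you already have a list of the site's URLs, for example from a sitemap. It also assumes that the first path segment (such as `/blog/` or `/products/`) is a reasonable stand-in for a "section" of the site. URLs are picked round-robin across sections, so small sections are included before large ones take up the budget.

```python
from collections import defaultdict
from urllib.parse import urlparse
import random


def sample_urls(urls, limit=500, seed=0):
    """Return up to `limit` URLs spread evenly across top-level site sections.

    Hypothetical helper: treats the first path segment of each URL as its
    section, which is an assumption that may not fit every site structure.
    """
    groups = defaultdict(list)
    for url in dict.fromkeys(urls):  # drop duplicates, keep input order
        path = urlparse(url).path.strip("/")
        section = path.split("/")[0] if path else ""  # "" = site root
        groups[section].append(url)

    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    queues = []
    for members in groups.values():
        shuffled = list(members)
        rng.shuffle(shuffled)  # random order within each section
        queues.append(shuffled)

    # Round-robin: take one URL from each non-empty section per pass,
    # so every section is represented before any gets a second slot.
    sample = []
    while len(sample) < limit and any(queues):
        for queue in queues:
            if queue and len(sample) < limit:
                sample.append(queue.pop())
    return sample


if __name__ == "__main__":
    urls = (
        ["https://example.com/"]
        + [f"https://example.com/blog/post-{i}" for i in range(900)]
        + [f"https://example.com/products/item-{i}" for i in range(40)]
    )
    picked = sample_urls(urls)
    print(len(picked))  # 500
```

In this example, all 40 product pages and the home page make it into the sample, and the remaining budget goes to blog posts. A purely random 500 URLs would be dominated by the blog. The sampled list is something you could then hand to the crawler instead of the whole site.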
